Maya to Houdini

This section desperately needs editing. I'd skim it.

I did a first version of this page after working on Happy Feet 2 in 2011, using Houdini in a very limited context in the lighting department. In 2014/2015 I've been lucky enough to use Houdini in a broader context on Insurgent and Avengers at Animal Logic, learning from some of the best Houdini artists around.

As such it seemed time to update this little tour. I'm hardly an expert (hell, it took me years to feel halfway competent in Maya, and I've been using it since maybe v2 in 2001 or so), but I'm now fairly confident of what Houdini is and isn't good at. And while it shouldn't be a competition, I imagine folk reading this will be like I was several years ago; sort of intrigued by what Houdini offers, but wary of a big scary jump from the safe Maya waters. As such, this is a competition, and I'll try and point out why it's worth learning.

It's also a big wall of text, sorry about that.

Why bother?

Check if any of these situations sound familiar:

- You've built up a reasonably complex scene in maya, thought you might want to edit the hypergraph network of your geometry, and winced

- You know that there's going to be changes to your geometry, which will involve a lot of uncomfortable and unwanted work to incorporate that into your scene

- You've tried referencing and been bitten by any number of its bugs, and by the very problems it's supposed to solve

- You've tried mel and python, and while it works, thought 'surely there must be a better way than this?'

- You can see an idea for a deformer or plugin in your head, but the thought of going to learn C++ to implement it is way too much work

- You've done a bit of particle expressions and thought 'this is kind of interesting, but it's a little old and cruddy, surely there's an update to this sort of data manipulation?'

- You've sworn at the connection editor one too many times for making seemingly simple A->B node connections difficult

- You've wondered why it takes a minimum of 3 nodes behind the scenes to create a cluster, or 2 for a lattice, or any number of curious intermediate nodes that seem to serve no purpose

- You've used the poly selection editor and thought 'this is nice, why can't I use this procedurally to do stuff rather than it being purely modal?'

- You've got 80% of an effect quickly, then realised you don't have enough control or buttons to push to take it that last 20% easily

- You've wondered why paintfx feels so disconnected from fluidfx, which feels disconnected from xgen, which feels disconnected from ncloth, which feels disconnected from...

- You've downloaded scripts from highend3d/creativecrash to do seemingly fundamental pipeline things

- You've looked in dismay at bugs that have been in maya since v2, and chortled at the thought that even in a best case scenario, where you're a paying customer on full support and you find a red alert bug, the best you can hope for is a service pack update that might fix it in 2 months

- You tried rigid bodies and were lucky to get 20 blocky shapes to work effectively

- You've started to get interested in the fundamentals of 3d maths, but felt that using MultDivide and IfThen shading nodes is probably not the best implementation of said maths

- You've wanted to start pushing the boundaries a little, and it's time for a change

(Wow, that list came out a lot more bitter than I expected...)

Houdini's Core concept: Points with data, manipulated via clean networks

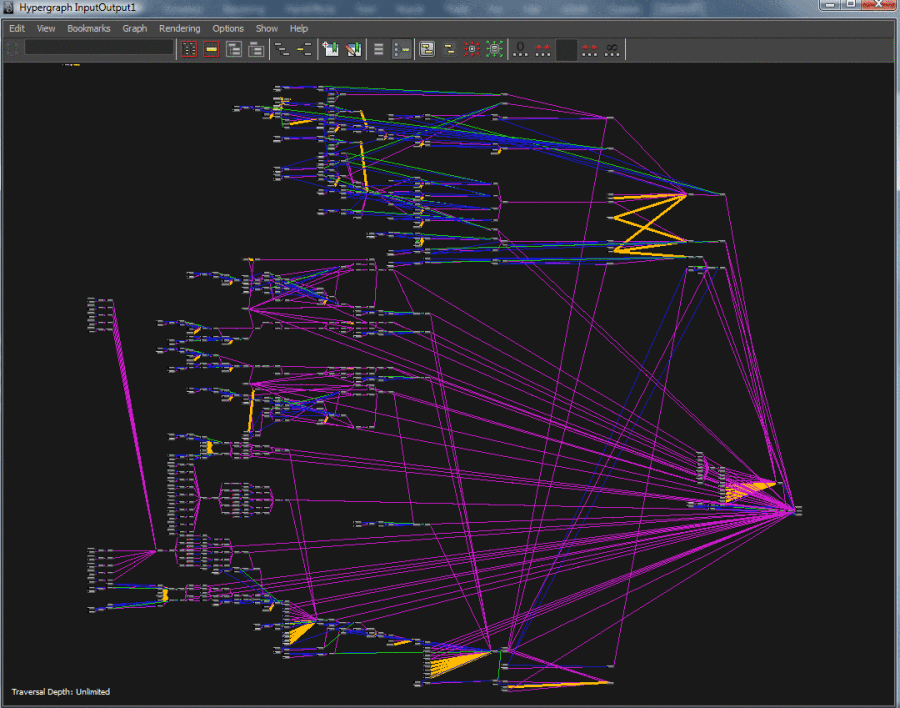

Here's a typical maya network:

Connection wires everywhere, hard to read, hard to edit. There's no point trying to organise it, as it'll just auto-layout as soon as you change any single node. Unless you're a power user (or glutton for punishment), you generally don't go fiddling around down here.

Why is this? One possible explanation is that the mesh data is simple; verts store their vertex position, and that's it. If the mesh data is simple, then the 'logic' of your scene setup has to be carried on the network. This might be ok if the nodes just had a single input and single output, but maya nodes definitely don't do this; a standard deformer will need inMesh and outMesh connections, messages, worldspace connections to manipulators, deformation clusters, membership lists etc. Add to that the extra nodes to store those selection sets and intermediate nodes, and it's no wonder maya networks end up looking as they do.

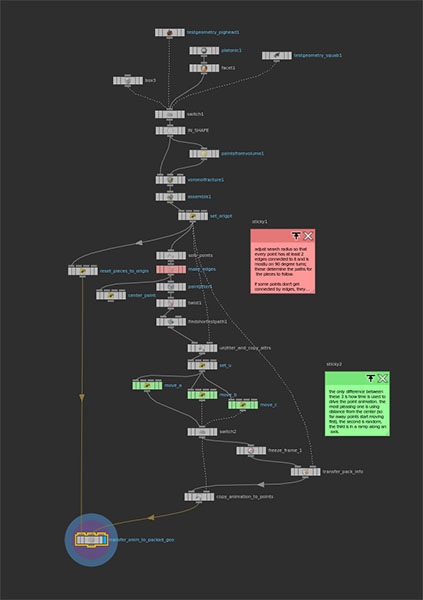

Compare this to a (admittedly simple) houdini network:

Connections are clean and node layout is remembered, so it encourages you to arrange things nicely and put in notes. You can follow it from top to bottom pretty quickly and get an idea of what's happening; it's similar to reading a Nuke comp.

If you look closer, most nodes have one input and one output. Those that take 2 inputs are usually merging geometry together, and if an output splits, it's to do interesting things and merge those results back later.

A key reason Houdini can have such clean networks is that it allows for extra data to travel with the mesh. More specifically, while a vertex in maya usually only stores its position, a point (Houdini talks about points more than verts) will store its position, but might also store its colour, what selection sets it belongs to (called groups in houdini), its velocity, scale, normal, or whatever other data you want to put on those points.

Similar to Nuke and channels, this means that logic that might be difficult to represent if the mesh can only store position, becomes much easier if it can store other values. The maya analogy here is particle expressions. If you could only modify position they wouldn't be much use, but a maya particle td will start storing rgbPP, speedmult, rand_this, foo_that... having 'smart' geometry makes certain things much easier.

It's not just points that can do this, polygons, particles, voxels, objects themselves, they're all capable of storing whatever data you want, to be manipulated later.

Another Nuke analogy comes in useful here. A grade node by default will affect the entire image, but if you specify a mask (and the mask is being carried through with the image data), then the grade effect will be limited to a certain region. Similarly, most Houdini nodes will affect all points on a mesh, but some node parameters let you specify point/poly data, which will modify their behaviour. Eg, a polyextrude will extrude all faces equally, but if you have an 'extrudedistance' attribute on the polys, then the extrusion depth can be set by that instead. Or a curve-to-tube node might set the tube radius based on the curve colour. Or a softbody's lag might be driven by a 'lag' value per point. This is incredibly powerful.

So again for emphasis:

Houdini geometry can store arbitrary data. This makes node networks cleaner, and nodes can read, act on, and manipulate that data.
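To make the 'nodes read data off the geometry' idea concrete, here's a rough sketch in plain Python. It is not Houdini's API; the `extrude_node` function and the dict-based prims are made up for illustration, and the only thing borrowed from the text above is the 'extrudedistance' attribute name. The node has its own distance parameter, but any poly carrying the attribute gets that value instead.

```python
# Plain-Python stand-in for Houdini geometry; this is NOT the hou API.
# Each primitive is a dict of attributes, and any key can be added freely.
prims = [
    {"name": "roof", "extrudedistance": 2.0},
    {"name": "wall"},  # no attribute here, so the node parameter applies
    {"name": "door", "extrudedistance": 0.25},
]


def extrude_node(prims, distance=1.0, attrib="extrudedistance"):
    """Return one extrusion depth per primitive (hypothetical node).

    The node's `distance` parameter is used unless a primitive carries
    the named attribute, in which case the attribute value is used.
    """
    return [prim.get(attrib, distance) for prim in prims]


depths = extrude_node(prims)
```

The network stays a single node with a single input; all the per-poly variation rides along on the geometry itself.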

Houdini's other Big Idea - Vex

If Houdini's core idea was just 'smart data and clean networks', it would be great (and in fact a lot of Houdini folk get by with this quite happily in their day to day lives). But the other side of Houdini is Vex.

Vex is the language that underpins most of Houdini. If you've used mel or python and thought 'yeah, another language, so what', a key difference is speed. Mel and python can be achingly slow, as scripted languages often are. It's better to compare vex to javascript in modern browsers; it's incredibly fast, getting near the performance of native C++ code a lot of the time. In fact it goes beyond javascript in that it's multi-threaded by default, so if you run a multi-core machine, performance gets faster again.

A usual trade off for this sort of performance is the time spent compiling code (eg, using xcode or VC++ to create a plugin, compiling can take minutes each time you make a change). Vex compiles very quickly, usually so quickly you barely notice it, so the default behaviour is for vex to compile automatically for you.

Because it performs so well, you can use it to deform huge amounts of geometry. Eg, something simple like adding a sine wave to 2 million points runs at 24fps on my 16 core workstation.
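For a feel of what 'adding a sine wave to points' means as an operation, here's a minimal sketch. It's plain Python rather than vex (so it says nothing about vex syntax or speed), and the function name and its amplitude/frequency/phase parameters are my own assumptions. The point is the shape of the work: the same tiny bit of maths runs independently on every point, which is exactly the kind of job that can be spread across cores.

```python
import math


def add_sine_wave(points, amplitude=1.0, frequency=1.0, phase=0.0):
    """Return new (x, y, z) points with y offset by a sine of x.

    Each point is processed independently of all the others.
    """
    return [
        (x, y + amplitude * math.sin(frequency * x + phase), z)
        for (x, y, z) in points
    ]


# A tiny row of 5 points along x, standing in for a 2 million point mesh.
grid = [(i * 0.5, 0.0, 0.0) for i in range(5)]
waved = add_sine_wave(grid)
```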

Another common trade off would be language complexity, but vex is remarkably clean considering how powerful it is. Javascript is a dog's breakfast, C++ is alien, blinkscript in Nuke looks like C++ but somehow worse. Vex is very clean (get a sample of that over on the HoudiniVex page).

Of course, not everyone wants to code in Vex, and that's fine. That's why we have vops. Vops is visual coding of vex. Similar to using hypershade, you get a network editor, put down a multiply node, an if node, a ramp node and so on. While in maya each of those nodes is actually a little C++ plugin, in Houdini each of those represents a line (or two) of vex, which is written and compiled for you behind the scenes.

Further, when you understand vex and vops, they are used everywhere in Houdini. Shaders are vex. Particle sims are vex. Fluid sims. Geo deformers. Camera lenses. Wire solvers. 2d compositing operations. There's so many places where if the mood strikes you, you can double click on a thing, and be presented with the vex code or vop network that makes it work.

Once you get a handle on Vex and Vops (and if you've used hypershade or any basic deformer stuff in Maya, it's very easy to get into), you wonder how you ever got by without it.

So that's the high level sell. What follows is more of a random walk through bits of the Houdini vs Maya mindset:

The nitty gritty

Workflow

Let's get the high level question out of the way. Obviously if you do any kind of sim work, Houdini is for you. Houdini excels at fx and simulation, but while the core sim tools are great, it's the workflow that sets it apart from Maya, or Max, or any other competitor. Node networks are clear and easy to read, the ability to cache out to disk at any time is incredibly handy; keep multiple versions live and easily switch between them, or blend versions, or use takes to try different options and clearly track what's different, or do wedge tests automatically... all stuff that you generally grimace and sweat through in Maya, is built into the base workflow of Houdini.

Similarly, once you stop trying to map Maya workflows onto Houdini, and go with the procedural flow, things go so much easier. My memory of using maya for many years is something like this:

Do this do that, delete history. Modify modify, delete history. Save a version. Delete history. Blendshape, fiddle, delete history. Realise you have to keep history now, deep breath, start stacking modifiers. Click click... oh no, they're in the wrong order. Sweat. Try and change the order. Swear. Go back some versions. Panic. Swear, delete history, try again. Break it again. Decide to write script to automate network creation. Swear you'll never do this again. Deliver job, on to the next.

Compare this to my general thought process these days in Houdini:

Load model, start putting nodes down. Realise node order is wrong. Drag node out, put it in the right place. Get to a dead end with that technique. Move that tree of nodes off to one side, put a post-it note next to it saying 'v01, has potential'. Start halfway down that node tree, branch a new node. Node node node. Realise something from the first branch could be useful. Merge the branches. Do something silly, realise the system is getting a bit slow. Put down a cache node, let it play through, now it's fast again, continue. Node node node. Wonder if I really need the middle 10 nodes. Select them all, disable them while watching the end result. Enable. Disable. They're not needed, drag them out, delete. Decide I want to compare v01 branch and v02. Pull both into a switcher node, toggle between them. Hear that the model has been updated. Go to the first node, change the filepath, go back to the end node, keep working. Decide little 4 node collection in the middle could be quite useful for other purposes, collapse into a subnet, convert that into a tool, now it's available as a virtual node at a later date.

And so it goes.

It's a much more fluid way of working, again the comparison to nuke is valid. You don't get punished for having a huge construction history of nodes, if anything it's actively encouraged. Because the node networks are clean, you can experiment, connect odd things together, branch off tests, merge back in. It's a sketchpad for doing 3d, that can scale to incredibly complex shots, but never feels like the 'this is a series of menus and buttons you must click in this sequence to get work done' that maya suffers from.

Houdini rewards you for going a little slower to start with; rather than select objects manually, maybe you use a wildcard based on their names on disk. The vertex (or point) selection might be determined programmatically by comparing their normals to a null, then taking a random subset of those points, then checking if they're below the ground plane.
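That point selection recipe can be sketched as three filters run one after the other. This is plain Python with invented names (`select_points`, `keep_fraction`, `ground_y`), and it assumes 'comparing the normal to a null' means a dot product against a direction and that the ground plane sits at y=0; in Houdini each step would more likely be its own node.

```python
import random


def dot(a, b):
    """Dot product of two 3-tuples."""
    return sum(x * y for x, y in zip(a, b))


def select_points(points, direction, keep_fraction=0.5, ground_y=0.0, seed=0):
    """Select points procedurally instead of by hand.

    1. keep points whose normal N faces along `direction`
    2. keep a seeded random subset of those
    3. keep only the ones below the ground plane
    """
    rng = random.Random(seed)  # seeded, so the selection is repeatable
    facing = [p for p in points if dot(p["N"], direction) > 0.0]
    subset = [p for p in facing if rng.random() < keep_fraction]
    return [p for p in subset if p["P"][1] < ground_y]


points = [
    {"id": 0, "P": (0.0, -1.0, 0.0), "N": (0.0, 1.0, 0.0)},   # facing up, below ground
    {"id": 1, "P": (0.0, 1.0, 0.0), "N": (0.0, 1.0, 0.0)},    # facing up, above ground
    {"id": 2, "P": (0.0, -1.0, 0.0), "N": (0.0, -1.0, 0.0)},  # facing down
]

# keep_fraction=1.0 keeps every candidate, so only the other two filters act.
picked = select_points(points, direction=(0.0, 1.0, 0.0), keep_fraction=1.0)
```

Because nothing here refers to specific point numbers, the same recipe keeps working when the model is swapped for a new version.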

One more story before moving on; I was discussing techniques today with a few maya lighters, and showing them how I'd approach a problem in houdini. I did something I thought was obvious; there were a bunch of shapes bunched up at the origin, each with a unique number ID, I wanted them laid out in a line. I did the seemingly obvious, and did this by writing a little expression to add the ID to each object's x-position. The maya folk laughed and said 'that's such a Houdini way of doing it'. I was momentarily confused; what other way would you do it? Manually? There was no other handle or identifier, and this way ensured that if the number of models changed, the layout would stay consistent.

Sure it might've been easier to just drag the 10 models into place, but that's a key difference; once you start thinking procedurally, you start thinking of every process or challenge in terms of recipes, steps, workflow. The inevitable truth is that you always end up getting a model update, a client note, a glitch that needs correcting. Why not take that little bit of extra time to come up with a hands-off workflow, to save you time in the future?
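The layout trick from that story is about as small as procedural thinking gets. Here's the idea as a plain Python sketch; the `layout_in_line` name, the `tx` key and the `spacing` parameter are assumptions for illustration, not the actual expression I wrote at the time.

```python
def layout_in_line(objects, spacing=1.0):
    """Return copies of the objects with ID * spacing added to their x position."""
    return [
        {**obj, "tx": obj["tx"] + obj["id"] * spacing}
        for obj in objects
    ]


# Four shapes all sitting at the origin, each with a unique ID.
shapes = [{"id": i, "tx": 0.0} for i in range(4)]
laid_out = layout_in_line(shapes)
```

If tomorrow there are 40 shapes instead of 4, the rule is unchanged and the layout stays consistent.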

Integration

Maya tries hard to unify the various modules, but it's an uphill battle. Xgen, bifrost, paintfx, nucleus, fluidfx, mentalray, these feel like totally disparate apps that have minimal communication with each other. Too often the answer is brute force; convert to polys, delete history, push to the next module that has a totally different methodology to the one you just left. That's even leaving out older or abandoned modules (software renderer, hierarchical subdivs, visor, old cloth, hardware render buffer, curve flow...)

Houdini has a few creaky corners, but for the most part, it all feels tightly integrated. Create some geo, tag the surface with some attributes, deform or create new geo from those attributes, send the parts that aren't tagged to a particle sim, make that drive a fluid sim, create a shader to render the fluid, then make the fluid glow based on the attributes you tagged waaay earlier in the nodegraph, and preview that in IPR in the renderer. That's trivially easy in Houdini, to the point that you barely think about it, it's just expected; all the parts talk to all the parts. You only notice it's missing when you go back to Maya.

Atomic vs monolithic

A few times I've gotten an interesting result with paintfx, maybe 80% of the final look, only to realize that I'm now paint(fx)ed into a corner, and there's nothing I can do. Similar with fluidfx; while it has a crazy amount of dials and sliders on that monolithic attribute editor, if your requirements don't fit, then you're stuck. Maya's approach could be generalised as fewer monolithic nodes, with lots of controls.

Houdini favours the reverse; lots of atomic nodes, with (relatively) fewer controls. A lot of these get pre-packaged up as shelf tools, so the houdini equivalent of a fluidfx sim might look like 3 or 4 nodes, but dive inside them and you'll find 10 more, and some of those have 10 more underneath, some of those have 5 more underneath too...

While that's overwhelming at first, it means that you're rarely locked in. Yes the more advanced stuff takes time to learn, but the reward is a lack of restriction. You're not expected to hand-wire your own fluid sims, but if the time comes, it's nice to know the functionality is there if you need it.

Discoverability

Related to the previous point, Houdini rewards the curious, Maya does not ('punish' is a strong word... 'humbles' perhaps?). Peek behind the curtain of maya and look at the graphs it creates for seemingly basic things, it's a mess back there. It's functional, but it's not designed to be played with. Mel and python aren't much better; the hundreds of scripts behind the scenes are largely there to wire nodes together for you, or create UI.

Quite often in Houdini you'll think 'hmm... that tool... how does that work?'. Double click it to reveal its contents, and there'll be a nicely annotated node graph to explain. Or several help examples showing different ways to use a tool. Or highly optimised vex code to achieve a certain effect (vs maya where code is usually to connect nodes or create ui). Poke around, find interesting things, feed it back into your work.

Depth

The thing that causes frustration at first ('why are there so many damn nodes? how can anyone know all of these?') becomes a fun game of discovery over time ('I was using 10 nodes to do a thing, then found houdini has 1 node to do the same thing!'). There's always another node, always another interesting trick around the corner. The unix/windows analogy fits well; unix is designed to be lots of small programs, each of which does one thing well, that can be connected together to do powerful things, while windows is meant to do 90% of what 90% of people want, quickly. There's a bit of a learning curve to unix (and houdini), but the reward is worth it.

Mantra

I used maya and mentalray for a long time, then prman and 3delight (with houdini) after that. Mantra makes me realise how many brain cells I wasted over the years. It's so reliable and knowable, I've forgotten what it's like to not have faith in your render engine. Throw crazy amounts of geometry at it, global illumination, millions of particles, volumes, all raytraced, it'll render.

Sure it has downsides; not blazing fast for simple things (but evens out and even jumps ahead of the competition as scene complexity increases), doesn't do GPU acceleration, no shader balls, ipr can be slow and twitchy, and optimising is a black art. None are showstoppers though.

What also impressed me, and I take for granted now, is how much you can customise shading, and the ease with which you can do it. I thought I was pretty good with hypershade, using ramps and samplerinfo nodes to do silly things. That's 10% of what you can do with mantra and its shaders. They can be as simple as a lambert, or a full visual programming setup where you pull in point clouds to represent thousands of textures on disk, driven by attributes of your geometry, which selects between 3 different sub-shaders, flipping the raytrace mode depending on distance to camera etc etc..

It's fun!

Maybe it's because I'm still learning, and because I used Maya for so long, but I find Houdini to be fun, while Maya feels like work. Then again, I've used Nuke for a few years now, and that still feels like fun, so....

There's more reasons, but I gotta get on with the rest of this document!

Houdini per department

Some quick notes.

Modelling

Houdini probably isn't ideal for speed modelling a jeep/character/pack shot for a commercial. I know modellers like to bind key operations to hotkeys so they can model as fast as they think; the Houdini workflow doesn't gel well with that process. In the previous version of this document I also said some of the modelling tools are a little old, specifically calling out bevels, booleans, split/divide. Amusingly as of H16 they've all been replaced with brand new super versions, booleans especially are now remarkably stable and fast.

So while I still wouldn't recommend building stuff from scratch (though there are several modellers now doing exactly that), Houdini makes a great 'modelling amplifier' tool. As soon as a modelling project morphs to something that could even be vaguely procedural (make this model out of lego, create us 20 plant variations, 5 building types, model rubble for a dystopian scifi landscape), it's awesome for that. The alembic import/export is pretty good, and is represented as nuke style read/write nodes, so if you plan your workflow, you can do the base stuff in maya, have a houdini scene that'll do crazy stuff to geo, and write it back out again.

And of course, if a project is procedural in nature (modelling neurons, or cyberspace, or random abstract forms, or meshed pointclouds etc), it's great.

Animators

Again, probably not. Rigging tools are there, but a bit rudimentary. If you're the kind of person who does a lot of custom rigging, fancy python procedural blah to make curves do xyz on certain conditions, might be worth a look, vex allows you to prototype deformers and ideas very quickly.

Like for modelling, Houdini can be a great data amplifier for animation. Import a cache or fbx from maya, and use it to make a crowd, or generate lots of variations, or filter it like audio through CHOPS, or use it to drive procedural animation, or or or...

The truth of it is that until recently, rigging and character animation just haven't been areas of interest for either SideFx or the majority of their customers, but it looks like things are changing. A few former XSI developers now work for SideFx, one of the more experienced rigging and character FX TDs now works for SideFx, and they've teamed up with a very character animation focused studio called Shed to do a short film completely in Houdini. It'll be interesting to see what v16 brings.

Motion graphics

I wager it's a good fit. C4d is fast and super capable, but if you're using tools like MASH and find yourself limited by both the speed and flexibility of maya in this field, Houdini excels.

Lighting

Definitely worth a look. Mantra is a great renderer, the render pass management and shader authoring are great, and it lets you get under the hood way easier than any other lighting/shading package.

There's been work in the last few versions to allow houdini to handle bigger and bigger scenes through stylesheets, ala katana. The end goal appears to be that you'd have massive alembic assets of your entire scene, but like Katana, you never load it into Houdini. Rather you just load bounding boxes if you can get away with it, and you can inspect the structure of the alembic files and assign shaders and render properties via stylesheets, but you never pay the cost of loading heavy assets into memory until the renderer requires it. Still some work to go on this front, but it has the potential to be very powerful.

Generalists, students

Sure, why not? Main thing I've found with Houdini is it's strengthened my fundamentals of 3d, because you end up manipulating stuff a lot of the time at a very low level, yet in an approachable way. It also gives you nice baby steps to increase your knowledge; at first you're doing simple expressions on high level objects, then a bit of vops for basic position, then colour theory, then vex, then a dabble in matrices, then lots of matrices, then that leads to understanding space transforms in shading, which leads to understanding light shaders, which leads to... it goes on and on, a great way to learn by doing.

Fx

Well dur.

Learning Houdini

Houdini was one of the first apps to provide a free non-commercial version, and it's still going. Its restrictions are very minimal; it saves to its own format, puts a tiny watermark in the corner of the interface, and a larger watermark on renders. It can load files from the commercial version, but going the other way will drop the commercial license back to the learning one until you quit.

There's quite a lot of learning material online, most of it free. Not many books last I checked, the 2 I have are kinda old and out of date (but if you must get one, 'Houdini on the Spot' is good).

Sidefx themselves provide hours of video tutorials and masterclasses, Peter Quint has a huge collection of videos on vimeo, and sites like 3dbuzz, fxphd etc are always running something or other.

The help within Houdini is quite good too; most nodes have at least one example you can launch directly into your session, some like cloth have 10 or more examples.

Thing is, once you get to a certain threshold of understanding, Houdini becomes somewhat self documenting. Load an example, step through the nodes, get an understanding. Unplug something, connect it somewhere else, see what happens. Once you understand the UI (which considering the depth of houdini is remarkably simple and clean), you can just poke around and play.

The Odforce forums are also a great resource. People often post questions there, the puzzle-solving nature of houdini means someone usually answers within a day. Something else that happens with Houdini and less with Maya is people often post little example scenes; the node wiring workflow seems to encourage this. I'm one of those folk lately posting answers when I can, I'm finding it's a great way to learn.

Sidefx staff seem to travel the world every few months, offering workshops wherever they go, or organise local studios to do talks. There's a friendly, sharing, 'lets all do this together' vibe that is missing from the Maya community for some reason.

Terminology - why does Houdini keep talking about points and not verts?

Best to explain that with a counter example; why does Maya treat particles and vertices as two different things? Or if you're gonna go all the way, what's the difference between a particle, a vertex, a curve cv, nurbs surface cv?

The answer is nothing. A particle is a 3d position in space. A vertex is a 3d position in space that is connected by edges to other vertices. A CV is a 3d position in space that is connected to other CVs to define a curve, etc.

Houdini takes that common description, 'a 3d position in space', and treats it as its own, lowest level data type, a point. Points are then connected together to define higher level primitives, so 2 points get connected to create an edge, link 3 or more edges to create a polygon, stitch 2 polygons together to create a mesh (those stitched points are referred to as vertices, which helps confuse maya folk). Each level of those can store data and be manipulated in certain ways, but because you know you can always treat everything as 'just points' regardless of what is built on top of it, it becomes the default way to mess around with stuff in Houdini.
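A rough way to picture that layering, as a plain Python sketch rather than anything Houdini actually exposes: points live in one flat list, and polygons are just lists of indices into it. The index entries inside each polygon play the role of vertices here. The data layout and the `poly_positions` helper are my own simplification.

```python
# Points: the lowest level, just positions (plus any other data you like).
points = [
    {"P": (0.0, 0.0, 0.0)},
    {"P": (1.0, 0.0, 0.0)},
    {"P": (1.0, 1.0, 0.0)},
    {"P": (0.0, 1.0, 0.0)},
    {"P": (2.0, 0.0, 0.0)},
    {"P": (2.0, 1.0, 0.0)},
]

# Polygons: lists of point indices. Points 1 and 2 are shared by both
# polygons, which is what stitches them into one mesh.
polys = [
    [0, 1, 2, 3],
    [1, 4, 5, 2],
]


def poly_positions(poly):
    """Look up the current position of every corner of a polygon."""
    return [points[i]["P"] for i in poly]


# Treat everything as 'just points': move point 1 once...
points[1]["P"] = (1.0, -0.5, 0.0)

# ...and both polygons that reference it pick up the change.
first = poly_positions(polys[0])
second = poly_positions(polys[1])
```

Ignore the `polys` list entirely and the same six points could be read as particles, which is the whole argument of this section.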

Basics: maya transforms and shapes vs houdini /obj and sops

Hopefully you get the difference between a shape and a transform in maya. A shape can be a simple poly shape, or it can be the result of many operations in the construction history of a shape, which ultimately outputs a shape. The transform takes your shape, and moves/rotates/scales it in the world.

Something you might have noticed is the way maya displays the transform -> shape -> construction history is messy and inconsistent. The outliner is very transform centric, and by default specifically hides shape and construction history nodes. If you enable them, shapes appear parented under transforms, but history nodes just get thrown into a huge list after the transform nodes. The hypergraph lets you toggle between the transform node view and the DG node view, but it resets its layout each time you switch, and the connections at the DG level can get VERY hard to follow... the general vibe is 'this is complicated. Look, don't touch'.

Houdini has the same basics, but the methods for displaying and interacting are different in a few important ways. Transforms are treated as containers. Inside those containers are the nodes that are wired together to make a final shape. The final node is the shape, and that is what is moved by the transform. In essence that's the same as maya, but while maya implies it via the outliner and hypergraph, Houdini makes it explicit, and it does this via how things are displayed in its node graph. By default the node graph displays all the transforms, similar to hypergraph. Double click on a transform and you dive inside it, revealing the nodes of its construction history.

Once inside the node network for an object, the difference to maya becomes more clear, in fact becoming more like nuke. While maya uses nodes, and has a few kind-of-ok tools for modifying them, houdini and nuke assume the user experience is ALL about nodes, so both offer similar important features:

- the node layout is saved, so when you return, it's as you left it

- you get post-it notes, backdrops, node colour tools to help you tidy and navigate your node graph

- data flow is kept as thin and clean as possible between nodes, so it should be easy to follow how data moves

- you can branch node networks, disable many nodes at once, rewire this to that... you can experiment with nodes in a way maya makes difficult, almost impossible

It's that last point that's quite interesting; whenever you have to dive into the construction history of maya, it's with a grimace and the script editor close by. Nothing is easy, lots of stuff requires scripts to be wired together properly, it's effort. Houdini actively encourages you to dive in, try adding another node, disable that node, see what happens, add a note saying 'this could be made better...'. It encourages a playground environment for node operations that maya lacks.

Also, similar to how it's easy in nuke to take a few nodes, add a few parameters, then collapse it into a tool or gizmo you can re-use, houdini lets you do exactly the same. The clean self contained node networks, the core concept of collapsing/expanding containers, means you can create a little node network to achieve a certain effect, drag it to your shelf, and know you can re-use it later without writing a line of code. The barrier for developing tools and features in houdini is MUCH lower than maya, where you really need a reasonable level of mel or python to be productive as a TD.
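Why is disabling a node so painless when each node has one input and one output? Here's a toy sketch in plain Python. The `run_chain` function, the `bypass` flag name and the number-crunching 'nodes' are all invented for illustration and have nothing to do with how Houdini is implemented; the idea is just that a bypassed node passes its input straight through, so nothing needs rewiring.

```python
def run_chain(geo, nodes):
    """Push geometry through a chain of single-input, single-output nodes.

    A node with bypass set passes its input through untouched.
    """
    for node in nodes:
        if not node.get("bypass", False):
            geo = node["op"](geo)
    return geo


# 'Geometry' here is just a list of numbers, to keep the sketch tiny.
nodes = [
    {"name": "scale", "op": lambda pts: [p * 2 for p in pts]},
    {"name": "offset", "op": lambda pts: [p + 1 for p in pts]},
    {"name": "clip", "op": lambda pts: [p for p in pts if p < 6]},
]

full = run_chain([1, 2, 3], nodes)

# Wonder if the last node is really needed? Disable it and compare.
nodes[2]["bypass"] = True
no_clip = run_chain([1, 2, 3], nodes)
```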

Rops vs render globals (esp render dependencies)

The 'nodes are important' motto extends to rendering and render layers. Unlike maya which (until recently anyway) had a single render globals window, Houdini uses another node view, where each node represents a render process. Each node contains what you'd expect a render engine needs to know; a list of objects, a list of lights, a camera, frame range, render settings etc.

So if you have a render represented as a node, what does wiring them together do? This creates a dependency, so if you ask the last node to render, all the previous nodes in the graph will be rendered first, one after the other. A typical render node chain might involve first baking shadows, then an indirect point cloud, then branch off into separate bg/mg/fg render layers. You can even insert controller nodes into these trees to further dictate how the nodes render; frame by frame for one node, or make an entire branch of render nodes do the entire frame range first, and so on. It's very powerful.
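The 'render everything upstream first' behaviour is a dependency ordering problem, which can be sketched in a few lines of plain Python. The node names, the final `all_layers` collector node and the `render_order` function are assumptions for illustration, and the sketch assumes the graph has no cycles; it says nothing about how Houdini actually schedules renders.

```python
def render_order(deps, target):
    """Return the nodes to render, dependencies first, ending at `target`.

    `deps` maps a node name to the list of nodes wired into it.
    Assumes the graph has no cycles.
    """
    order, seen = [], set()

    def visit(node):
        if node in seen:
            return  # already scheduled via another branch
        seen.add(node)
        for upstream in deps.get(node, []):
            visit(upstream)
        order.append(node)  # only after everything it depends on

    visit(target)
    return order


# Shadows first, then the point cloud, then the three layers branch off.
deps = {
    "pointcloud": ["shadows"],
    "bg": ["pointcloud"],
    "mg": ["pointcloud"],
    "fg": ["pointcloud"],
    "all_layers": ["bg", "mg", "fg"],
}

order = render_order(deps, "all_layers")
```

Asking for just `"bg"` would schedule only shadows, the point cloud and bg, leaving the other layers alone.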

takes vs render layer overrides

So if each ROP node takes the place of a render layer, how do render layer overrides work? Houdini has this split into a separate system called 'takes'. Functionally its almost identical to render layer overrides; you create a new take, then associate object attributes with that take, and change them to your bidding. Switching to another take will change those values back to their default. In terms of implementation, maya watches for attribute changes automatically, and makes their title orange to show they've changed in that renderLayer, ie, watching for changes is always implicitly 'on'. Houdini goes the other way; by default when you switch into a take all parameters become grayed out. The idea being that if they're grayed out, their at their default state, and aren't affected by this take. You need to right-click on a parm, choose 'include in take', and it becomes active and able to be modified. Alternatively you can turn on 'auto takes', which will immediately link parameters to a take.

A few niceties of houdini takes vs maya render layer overrides; you can open the take list panel which shows you all the parameters associated with each take. You can parent takes to takes within the take list panel, so that you can have one set of basic overrides, and many other takes will inherit from that take. Takes are intentionally separated from renders because they're useful for general work; hence the name, 'takes'. You can set up a scene, then, movie set style, go for 'take1', and edit the scene non destructively, then create take2, do another run of changes, take3, take4...
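
The inheritance between takes behaves a lot like layered dictionaries. Here's a minimal sketch in plain Python using `collections.ChainMap`; the parameter names and take names are hypothetical, and this only models the lookup behaviour described above, it isn't how Houdini stores takes.

```python
from collections import ChainMap

# Default parameter values, ie what you'd see with no take overrides.
# Parameter names are invented for illustration.
defaults = {
    "key_light/intensity": 1.0,
    "key_light/colour": "white",
    "hero/visible": True,
}

# Each take stores only the parameters that were explicitly included in it.
base_overrides = {"key_light/intensity": 0.5}
shadow_pass = {"hero/visible": False}

# A take parented under another: lookups fall through child -> parent -> defaults.
take_base = ChainMap(base_overrides, defaults)
take_shadow = take_base.new_child(shadow_pass)

print(take_shadow["hero/visible"])         # overridden in the child take
print(take_shadow["key_light/intensity"])  # inherited from the parent take
print(take_shadow["key_light/colour"])     # untouched, falls back to the default
```

Parameters that were never included in a take simply fall through to the default, which is the same idea as the grayed out parms.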

To associate these takes with a render, there's a 'render with take' drop-down on each render node, where you specify which take to use.

Interestingly, I never use takes these days, nor do most folk I know. I'm doing almost no lighting work these days which is probably a large part of it, but generally speaking if I want to see a variation of a thing, I won't use takes, because then it's not visible in the network. If it's not in the node view, it feels non-houdini. What is more common is to branch off another part of the network, make changes, and name the output clearly as 'OUT_SHADOW' or whatever it's meant to represent. The rop that's meant to do the shadow pass will refer to this object instead, no takes required.

Houdini viewport shading vs maya viewport shading

I used to say maya had the advantage here, now I'm not so sure. If you watch the maya demo videos they make great use of viewport2.0; fancy shaders, shadows, occlusion, particles, volumes, all running at 30fps, isn't it awesome. In practice, I know very few folk who are running VP2.0 for work, it's so glitchy and there are so many caveats with how it's used. My quick straw poll, from walking around and looking at maya on desks, is that most people are still using the legacy viewport in wireframe mode.

If you look at the houdini demos, they push a similar angle; cool shaders, realtime lights and AO, all the mod cons. Unlike maya, I've seen people work this way in production. A key difference is that there's no old-vs-new viewport mode, it's all unified, making reasonably high demands of your graphics card, but nothing an nvidia card made in the last 4 years can't handle.

A thing maya does well is to represent procedural shaders in the viewport. If you're patient, you can run ramps into layered textures, over procedural noise and bitmaps, and you can see that in maya's solid mode. Houdini will happily let you display complex colour data if it's in the geometry, but if you're doing complex mantra shaders, then you can't see that in the viewport. This is simply because vex, the language that underlies mantra shaders, is capable of way more than GLSL is, so it'd be difficult to port such things across automatically.

If you stick to the standard shaders, houdini fares better. The recent push to simple physically plausible rendering means that the base shaders just have a few simple sliders, and slots for diffuse/spec/bump maps. Sidefx have implemented GLSL approximations of these mantra shaders that will automatically use the same texture paths you define for your mantra shaders. v15.5 will even do viewport displacement if you want. Environment lights do nice realtime dome lighting (with support for hdri textures), area lights are loosely approximated.

If you're super keen to get your awesome mantra shader to display in the viewport, you can write GLSL directly inside houdini, and have it display immediately. It's esoteric and underdocumented, but it's there should you want it, with less hoop jumping than maya.

A constant annoyance with Houdini is viewports glitching out. Sometimes geo will get 'stuck' in the viewport, even if you delete it. This can normally be fixed by just creating a new 3d viewport window, but still, annoying.

user interface

much more configurable than maya; multiple floating panels, or everything docked together, or several duplicates of the one panel, do what you want. Like nuke though I tend to run fairly minimal; big node editor view, smaller 3d viewport with tabs behind it for render view and others, and below it a smaller tabbed section for python prompt, attribute spreadsheet, hscript prompt.

alt-' or ctrl-b will maximize a viewport, takes a while to stop hitting spacebar...

python, drag anything into the console

something that irked me for a while is that there's nothing like maya's mel output window in houdini. Ie, everyone seems to learn mel the same way; you do a sequence of actions, look at the mel editor to see the commands that have been echoed, copy them, and start working up a melscript to do your bidding. This works because 99% of the maya UI is written in mel; it's what makes it so flexible (and also so slow...). Houdini's python interface doesn't allow you to interactively see commands as you run them, so it takes some digging around at first to understand what's going on.

That said, once you get your head around it, python in houdini is quite nice. That should be a full page by itself really, but a nice trick is that you can drag anything into the houdini python console, and it'll appear as a proper python object path. That's not just objects and lights, but bits of the interface too.

project structure

As of v14 you can now set up a workspace/project similar to maya, which is nice. It sets up unix style variables, so you can use $TEX/foo.exr to get to the texture paths, for example.
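
If you haven't met unix style variables before, the expansion is just string substitution. Here's a tiny sketch in plain Python using `string.Template`; the variable values and paths are invented for illustration, and this only mimics the idea, it isn't Houdini's own expansion code.

```python
from string import Template

# Hypothetical project variables; these paths are invented for illustration.
project_vars = {
    "JOB": "/projects/demo",
    "TEX": "/projects/demo/tex",
}


def expand(path, variables):
    """Substitute $NAME style variables in a path string."""
    return Template(path).substitute(variables)


print(expand("$TEX/foo.exr", project_vars))  # /projects/demo/tex/foo.exr
```

Move the project somewhere else, change the variables, and every path that uses them follows along.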

use http paths for textures, neat trick

cute trick of houdini; when defining paths to textures, they can be relative paths, or explicit paths, or paths with variables, or if you're feeling saucy, a direct http link. Nice for portable demos when sharing hip files on forums and in email.

mplay

Mplay is houdini's version of fcheck, which while getting long in the tooth, does most of what you need. Ram play sequences, apply luts, exposure, gamma, annotations, convert files, it's handy to have in a pinch.

implicit linear workflow everywhere

Well, it is. Mplay defaults to a viewer gamma of 2.2, internally mantra is all linear light. That's nice.
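
For anyone fuzzy on what a viewer gamma of 2.2 actually does, here's a small sketch in plain Python. It assumes a simple power-law curve (not the exact sRGB transfer function, and not a claim about mplay's internals), and the function names are my own.

```python
def linear_to_display(value, gamma=2.2):
    """Encode a linear-light value for viewing with a simple power-law gamma."""
    return max(value, 0.0) ** (1.0 / gamma)


def display_to_linear(value, gamma=2.2):
    """Invert the encoding, back to linear light."""
    return max(value, 0.0) ** gamma


mid_grey = 0.18  # a common linear-light reference value
encoded = linear_to_display(mid_grey)
print(round(encoded, 3))
print(round(display_to_linear(encoded), 3))
```

The renderer works on the linear values, and the viewer applies the curve only for display, so a linear 0.18 shows up at a bit under half brightness on screen.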

other interesting houdini features for lighter/lookdev types

some of these are probably mentioned above, but to summarise:

- buy a single seat of houdini, you get essentially unlimited mantra rendering on your farm. that's pretty sweet. (you still need to export your houdini scene into a .ifd per frame, to do this requires a license, so you'd need a few of these if you run lots of jobs on your farm)

- houdini ships with a simple farm manager for free called hqueue. hard to find info on people using it in production though.

- houdini has a built in node based compositor. it's not nuke, but fine in a pinch.

- similarly, buy a single seat of houdini, you can use mplay on any machine on your network, as many as you want.

- sidefx do daily builds of their software, and respond very quickly to bug reports.

ok ok houdini is awesome. anything bad?

Yeah, a few things.

nodes are great/nodes are awful - The 'nodes are awesome' motto it shares with nuke means it can suffer the same cry of horror as nuke: 'oh god, it's full of nodes!' Picking up a shot from someone else can be daunting at first, as you have to get your headspace into how they've set up their node network. Even with annotations, coloured nodes, groups etc, other people's work can be tricky to decipher. Only made worse by...

nested nodes - Nuke comps can be scary, but they're flat. If there's a show-wide comp template, you have a fighting chance of being able to follow the node flow. Houdini doesn't allow certain nodes to co-exist on the same hierarchy (eg, you can't have sops and dops floating around together), so they can either live in their appropriate top level container (/obj, /chop, /out etc), or you can have subnets to keep similar things together. Great if used appropriately, but can get out of control if you're not careful. I've seen sop networks that have a dop subnet, with a shopnet under that, and an objnet under that with a camera, and a chopnet under that. Not easy to pull apart. If you have a lot of interdependency, you find yourself diving in and out of networks like a dolphin on crystal meth, or opening several node graphs and trying to follow connections between graphs. Shader graphs are the worst, if left unchecked you can get 10 levels deep into shopnets, like playing a vop version of nethack.

no unified object/render graph - Same as above, but mentioned for emphasis. This is katana's big win; everything a lighter would want is all exposed in a single graph. In houdini you're always jumping between the /obj graph where your cameras/objects/lights are, and /rop where your render nodes are. You get limited gui tools to help confirm if your object render list really exists in the scene, or if your light has been renamed, or if this shader override will work. Katana (from what I've been told anyway) makes all this mostly transparent. Sounds like this is being addressed in 15 though.

older complex modules don't have great docs - Sidefx have made great improvements in the last few years with the quality of their documentation. Mantra went from a massively underdocumented renderer to having pages similar in tone to Arnold's great help, like this page on sampling and noise. Dops and Chops still don't have any high level guides like this though, which makes it tricky to get going.

learning is endless, and deep, and ever changing - Related to the previous point, I wager a lot of the reason the docs lag behind is that Sidefx has never focused on winning over new users, they've largely focused on helping power users do cooler stuff. As such, they'll push things out at a cracking pace, often releasing stuff before the docs team have time to catch up, or expect the user base to find their own way. This happens with every release, so there's a certain amount of homework required to be an efficient Houdini user, otherwise you'll find yourself left behind on an older, unsupported technique. You can still create particle setups that date back to v9, or particle fluids from v10, or latest grain stuff from v15. The docs barely explain what is latest vs old, often the only way to know is via trawling the odforce forum.

You could kinda make that argument about most 3d apps, but Houdini can feel particularly alienating when you venture into the tricky stuff. I'm lucky enough to be able to ask a long suffering team of talented artists at work, so I have a support network of sorts, but it'd be hard going to learn this solo. SideFx are aggressively trying to make their software easy to pick up, but don't hide the details at the pointy end, and assume if you're poking around in dops gas microsolvers, you probably know what you're doing.

Houdini expects you've used Houdini before - The problem of course is if you don't have several years of Houdini behind you, and you haven't ramped up from old style pops, to new style dops, to new pop dops, to flip, to pyro, to pyro 2, to FEM, to cloth, to grain... it's a lot of ground to make up, and there's not much of a roadmap. The hour or multi-hour masterclasses on vimeo for certain modules are great, but some are out of date, and I still don't think I've seen a definitive explanation of how and why Dops work as they do. I'm getting there through repeated exposure and belligerence, but a proper guide would be nice.

$VAR vs @attr, hscript vs vex, point sop vs wrangle sop (and also python) - It's a hodge podge. $VAR, hscript, point sop are the 'traditional' way of doing things, they're limited performance wise, the syntax is a little hairy, but hey, they get stuff done. @attr, vex, wrangles are the modern way, are multithreaded, and more internally consistent, but it's proving difficult to transition old users to the new ways, and there's always a corner case where users say 'see! can't do that with @attr/vex!', and rattle their point sops. Worse, a lot of the learning material out there, both in written form and video tuts, is still using the old style syntax, making it confusing as hell for new users. I suggest avoiding the old methods for as long as you can, at least until the 'zen of houdini' kicks in, and you get a better understanding of what you're doing. H15 also brought about a welcome change, in that you can use @attr syntax in a lot more places where traditionally you could only use $VAR, which is nice.

And then there's python. I don't think many apps could claim to support 3 languages (4 if you count C++, 5 if you include some of the low level UI stuff, 6 if you add XML in there too), but there it is. Unlike maya and nuke though, you generally don't need to know a lot of python to get stuff done in houdini. It's mainly for pipeline integration, otherwise again, skip it until you need it. There are also corners of the python API that aren't implemented yet (damn you hou.take), but they can mostly be worked around.

Still, it all adds up to a bit of a confusing mess re technique, hopefully it'll settle down in future to be 90% vex and @attrs, 8% python, and 2% hscript and $VAR for the grizzled old timers. 😉

Yet another glib summary

A mailing list was chatting about the pros and cons of swapping packages, I felt compelled to write this little blurb.

Something I always find interesting in the software debates is you always hear about people moving /to/ Houdini for whatever reasons, usually dissatisfaction with their current app. I don't think I've ever heard people move /from/ Houdini in the same angry tones.

You'll hear people (usually non-Houdini users) say Houdini is too hard, or too expensive, too slow, can't do this or that, but generally speaking the Houdini users themselves are a pretty happy productive bunch. Further, it's not like the user base has only ever used Houdini and doesn't understand the pros and cons of the rest of the 3d scene. Nearly everyone started in Maya/Max/XSI/C4D/LW/Blender, got good at it, got frustrated at the limitations of that app, tried Houdini.

We're all capable of dropping back into Maya or whatever if it really is the fastest or easiest way to get things done, but as your Houdini skills sharpen, you end up opening Maya once a week, once a month, every 2 months, 6 months, then eventually not at all.

It's not for everyone, I couldn't imagine trying to do fast turnaround pack shots in it. But then a few years ago I couldn't have imagined motion graphics folk, or realtime games folk having any use for it either, and from what I understand they're the fastest growing userbase within the Houdini community. I'm curious to see where it goes over the next few years.

I find it funny that I sound exactly like the cult joining, kool-aid drinking, Houdini superfan that I despised for years during my Maya days. Having burned through countless 3d apps over the years I thought I was immune to software zealotry, yet here I am, preaching away. Go figure.

Convinced?

Wow, you read this far? Well, you better head to HoudiniGettingStarted, and start learning stuff!